Reconsidering Writing Pedagogy in the Era of ChatGPT

Lee-Ann Kastman Breuch, Asmita Ghimire, Kathleen Bolander, Stuart Deets, Alison Obright, and Jessica Remcheck

Study Methods

Given this backdrop of scholarly discussion, we were curious about what undergraduate students thought about ChatGPT. Our study best resembles a combination of usability testing and contextual interviews. We asked all participants to complete a set of five ChatGPT tasks, from which we gathered quantitative and qualitative data. After these tasks, we asked a series of contextual interview questions to learn more about student reactions to ChatGPT as a tool for academic writing. In short, students were invited to complete writing tasks, read ChatGPT responses, and provide numerical ratings and open comments about what they read. Our study was guided by the following research questions:

- How are undergraduate students understanding ChatGPT as an academic writing tool?

- To what extent are students incorporating ChatGPT into their writing product(s)?

- How are students thinking about ChatGPT in their writing process?

Because our focus was academic writing, our prompts were limited to verbal writing tasks; that is, they did not include content related to coding assistance, problem sets, or other types of multimodal writing activities. We recruited students through email invitations sent by the Undergraduate Office of the College of Liberal Arts and the Department of Writing Studies, as well as through messages from instructors of writing classes. The Institutional Review Board approved the study and granted it exempt status (STUDY00018525).

Our research team conducted usability sessions virtually with individual student participants using Zoom video conferencing software. All participants received a consent form that included permission to record the usability session. During each session, one moderator led the session and gave the participant remote control of a ChatGPT session accessed through an account managed by the research team. We made this decision to minimize the risk that participants would have to share personal information with an outside organization. Other team members were also present to observe and take notes. Each session included an introduction and overview of the study; an explanation of consent and confidentiality; completion of a background questionnaire; completion of five writing tasks using ChatGPT, each followed by rating and open-comment questions; a product reaction card exercise in which students selected five words to describe their experience; and a debriefing interview. Each session lasted about sixty minutes. Full descriptions of the test materials are provided in Appendix A. Participants were offered a monetary incentive, a $15 gift card, for participating in the study. Initially, 49 students responded to the invitation; however, several were unable to complete the session because of time conflicts, and others completed only part of the session. A total of 32 students completed the usability session. These participants represented 17 academic majors within the College of Liberal Arts and 5 academic majors in other programs at the university.

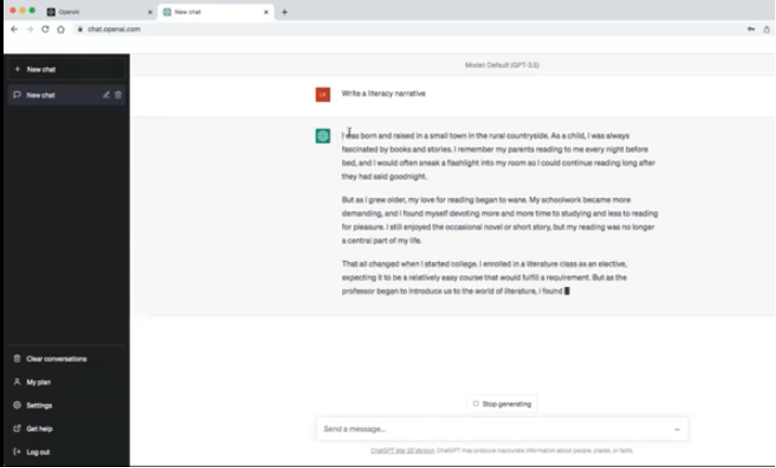

The usability sessions included five scenario tasks meant to simulate academic writing assignments that students may encounter in their classes, whether in a first-year writing course, a discipline-specific course, or a designated “writing-intensive” course. We recognize an inherent limitation of using a usability test for this research: in an academic setting, students may not fully reveal their true thoughts about ChatGPT texts because they fear being suspected of academic misconduct. Participants received a scenario and a relevant prompt and were asked to type the prompt verbatim into ChatGPT. As shown in Figure 1, once the prompt is entered, it appears next to a red box on the screen, followed by a green box in which ChatGPT generates its response.

The scenarios and prompts are described below, along with a video of each task:

- You have heard that ChatGPT can write essays. You decide to ask ChatGPT to “write a literacy narrative,” which is a prompt you received in an undergraduate writing class for an upcoming assignment. [insert Task 1 video here]

- You are writing a research paper for an undergraduate class and decide to ask ChatGPT to “summarize research about the use of computers in higher education between 1970 and 2020.” [insert Task 2 video here]

- Provide a list of best practices in health writing. [insert Task 3 video here]

- Enter a prompt in ChatGPT that is similar to a paper topic that would be common and/or expected in your major. [insert Task 4 video here]

- Analyze the role of the Jesuits in China in the 16th-18th centuries, particularly in regard to the technology of the telescope. Include as much detail as possible and use citations in Chicago Style. [insert Task 5 video here]

For each scenario and prompt, participants were asked to “think out loud” and share any thoughts about the ChatGPT texts they were viewing. We then asked students to rate each ChatGPT text on a scale of 1 to 5 (1 = low; 5 = high) in response to the following questions:

- How well does the ChatGPT text meet your expectations?

- How likely would you be to use this ChatGPT text unaltered as your academic homework?

- How likely would you be to use this ChatGPT text not as unaltered but rather to generate ideas about organization, content or expression for a future draft you might write?

- How satisfied are you with this ChatGPT text?

Finally, in the debriefing interview, we asked participants additional questions, such as:

- What was your first impression of ChatGPT?

- What do you like about the ChatGPT texts produced?

- What do you not like about the ChatGPT texts produced?

- How do you rate the credibility of ChatGPT texts on a scale of 1 to 5 with 1 being low credibility and 5 being high credibility?

- How do you rate the relevance of ChatGPT texts on a scale of 1 to 5 with 1 being low relevance and 5 being high relevance?

- How likely are you to use ChatGPT in an academic class? Why or why not?

- What questions, if any, does ChatGPT raise for you?