Stylistics Comparison of Human and AI Writing

Christopher Sean Harris, Evan Krikorian, Tim Tran, Aria Tiscareño, Prince Musimiki, and Katelyn Houston

Methodology

To conduct a style analysis in the fashion of Edward P. J. Corbett, under the hypothesis that writing prompt design and technology influence the complexity of student and AI-generated texts, we collected 66 samples written in a variety of genres (essays, I-search essays, letters, annotated bibliographies, instructions, literary critiques, exam answers, and interviews) by students at each university academic level, from developmental first-year writing to master’s degree courses. Because we collected writing samples that had been submitted for course grades, we received IRB exemption.

We opted not to evaluate one submitted handwritten text due to the labor involved. We also evaluated only one of the annotated bibliographies, because GAI often fabricates sources and annotations are often quotation-laden and stylistically inconsistent.

To test GAI’s writing capabilities, to ascertain whether we should use the instruction genre, and to collectively formulate a process for working with AI prompts, we asked Google Bard and GPT-4 to write instructions for how to make peanut butter and jelly sandwiches. **The video here** explains how AI writing works and how we wrangled AI with the instructions. We need to re-record this video because it only captured one corner of the screen and two microphones were on. We streamed videos so they were stored in a safe place.

We asked GAI to incorporate images into sample texts to evaluate how accurately it would do so, as some of our human-generated texts had images. Google Bard could integrate images, with citations, or suggest appropriate images at certain junctures. GPT-4 could suggest appropriate images at certain junctures, and in one case, it suggested images when it was not prompted to do so. We opted not to ask GAI to include images due to these differences.

All of the human texts were submitted for grades between 2017 and 2023 in English major and first-year writing program courses at California State University, Los Angeles (CSULA), a Hispanic-Serving Institution with 73.18% Hispanic, 11.18% Asian, 5.48% white, and 3.93% black students enrolled in Fall 2022. About 70% of students are first-generation college students (Student Demographics, 2023). By comparison, the United States population is 19.1% Hispanic and 58.9% white (Quick Facts, 2023). Of citizens ages 18-64 in California, 46.% can speak a language other than English and 28% speak English “very well” while 17.8% of those who primarily speak Spanish at home speak English “very well” (California Language and Education, 2023).

California language demographics and the demographics of CSULA students indicate that the sample population is linguistically complex though qualified to garner admission to the California State University system, so any assumed linguistic prowess of English majors is balanced by low self-efficacy in speaking English. Given that generative AI expurgates dialectal markers from texts, this study measures a diverse human population against homogenized machine writing. Future similar studies would benefit from categorizing samples with dialectal and linguistic markers.

After we collected the model human texts, we stored them in a shared, secure university drive and then treated them in the following manner.

- Texts were coded, in their file names, by batch and grade level.

- All identifying information was removed from each text.

- Given a 12-point font and one-inch margins, and to avoid analyzing multiplicities of voices, direct quotations longer than two lines were removed. Paragraphs consisting of more than 75% direct quotation were removed (Corbett and Connors, 1999, p. 360).

- Titles, headings, captions, footnotes, references lists, and parenthetical citations were removed, as they are not directly linked to converting thought to text.

- Excessive paragraph markers were removed, and the start of each paragraph was marked with three equal signs (“===”) to create a prominent visual identifier.

- The sophomore-level texts were written for a second semester, first-year writing course for engineering and computer science majors: Introduction to Technical Writing. The texts collected from that course included essays on ethics in professions, sandwich-making instructions, and analyses of instructional websites. The master’s level texts were critical introductions for course proposals. These genres often include several subheadings and bulleted lists. When evaluating the texts, we considered lists of items as one sentence and lists composed of sentences as part of the preceding paragraph.

- In Microsoft Word, we enabled “Show Readability Statistics” and used it to record each text’s:

- Number of sentences,

- Number of paragraphs,

- Words per sentence,

- Words per paragraph,

- Average sentence length,

- Average paragraph length in sentences,

- Flesch Reading Ease, and

- Flesch-Kincaid Grade Level.

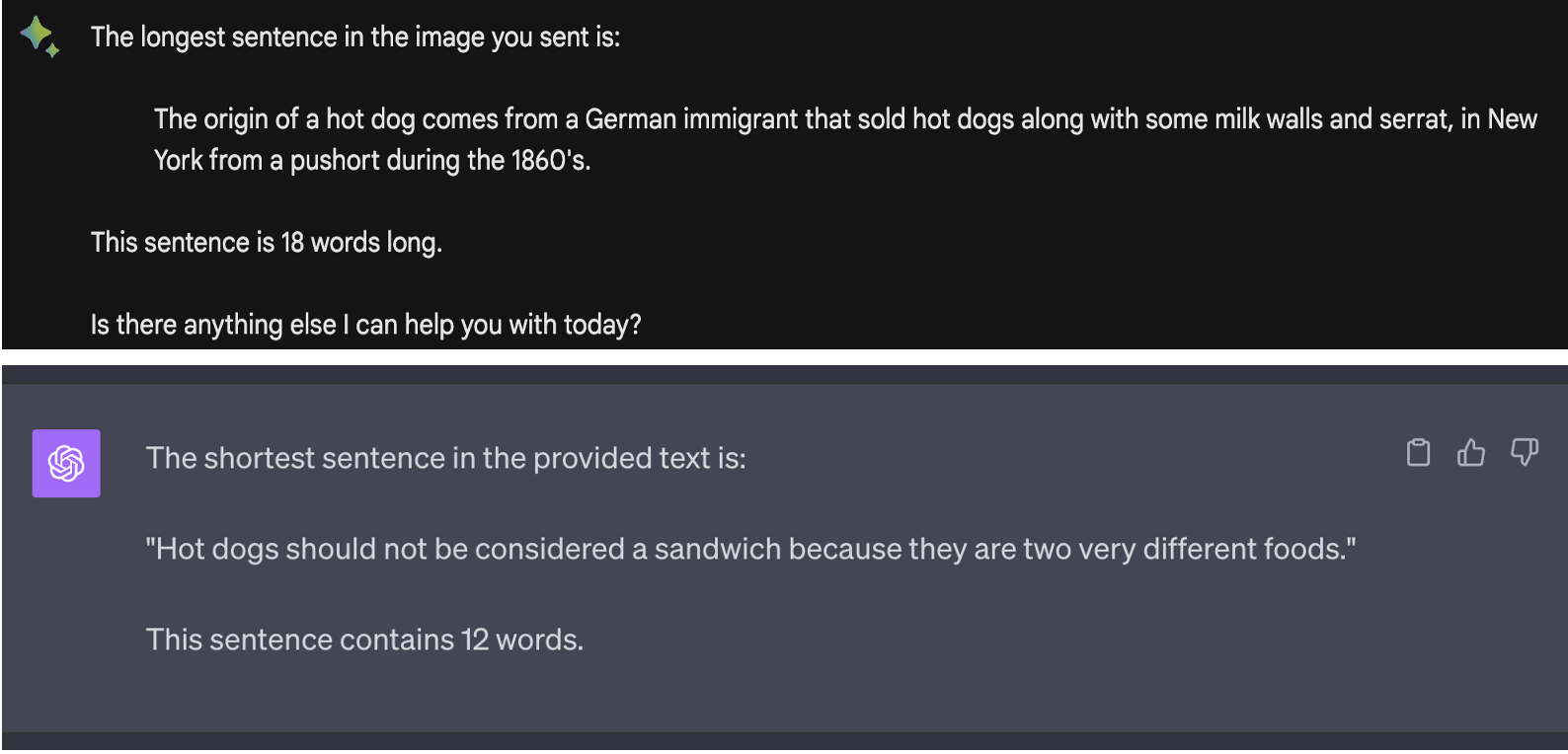
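The counts and scores listed above can be approximated outside of Word. The following is a minimal sketch, not the procedure used in the study: it assumes paragraphs are marked with “===” as described above, uses a naive rule for sentence boundaries, and applies the standard published Flesch Reading Ease and Flesch-Kincaid Grade Level formulas. The function names are hypothetical, the syllable count must be supplied by the caller, and Word's own counts may differ slightly.

```python
import re

PARAGRAPH_MARK = "==="  # paragraph marker described in the procedure above


def basic_counts(text: str) -> dict:
    """Count paragraphs, sentences, and words in a '==='-marked text."""
    paragraphs = [p.strip() for p in text.split(PARAGRAPH_MARK) if p.strip()]
    # Naive assumption: a sentence ends at ., !, or ? followed by whitespace.
    sentences = [
        s
        for p in paragraphs
        for s in re.split(r"(?<=[.!?])\s+", p)
        if s.strip()
    ]
    words = sum(len(s.split()) for s in sentences)
    return {
        "paragraphs": len(paragraphs),
        "sentences": len(sentences),
        "words": words,
        "words_per_sentence": words / len(sentences),
        "sentences_per_paragraph": len(sentences) / len(paragraphs),
    }


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Standard Flesch Reading Ease formula (higher scores read more easily)."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Standard Flesch-Kincaid Grade Level formula (approximate U.S. grade)."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Illustrative text only, not a sample from the study.
sample = "=== The cat sat. The dog ran fast.\n=== Birds fly."
counts = basic_counts(sample)
```

The sketch expects a non-empty text; an empty string would raise a division error. Abbreviations such as “e.g.” would be miscounted as sentence endings, which is one reason manual checking remains useful.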

- Because GAI software processes textual features such as letters, words, punctuation, and spaces by issuing each one a token and then counting the tokens, it currently cannot effectively count words or identify longest and shortest sentences: it treats the tokens representing spaces and punctuation as words, even when prompted not to do so. With API access to GPT-4 and extensive training, the AI could potentially learn how to measure these characteristics.

- Before collecting additional information, we consulted a sample size calculator to determine a sample with 95% confidence and a 10% margin of error. We set the margin of error at 10% to account for the complexity of identifying sentence openers, as we may errantly identify the subject of a clause as the subject of a sentence. The calculator suggested we analyze 40 texts for the additional features.

- We used a random number generator to identify which texts to analyze further. To ensure consistency across grade levels, we generated numbers amounting to between 40% and 60% of each grade level’s sample size and ended up evaluating 11 first-year, 8 sophomore, 11 junior, 6 senior, and 3 master’s texts.

- We used a text length sorter to identify paragraph features since the sorter counts by hard enters.

- Each text was disaggregated into a list of numbered sentences to facilitate manual coding.

- We again used the text length sorter to find the longest and shortest sentences.

- In this second analysis, we identified the following:

- The longest sentence in words,

- The shortest sentence in words,

- The longest paragraph in sentences,

- The shortest paragraph in sentences, and

- Sentences beginning with their subjects (in the form of nouns and noun phrases that might be paired with articles and determiners mixed with adjectives).

- Simple sentences. Sentence fragments and run-on sentences were evaluated at face value. If a sentence had zero independent clauses, it was categorized as a simple sentence.

- Even after training, GAI could not correctly identify parts of speech, so we manually identified sentence openers and sentence types.
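The sample size step above can be checked with a short calculation. The sketch below is an assumption about how such a calculator typically works (Cochran's formula with a finite population correction and a worst-case proportion of 0.5); the text does not say which calculator was used. It also assumes a population of 66 texts. The `draw_texts` helper and its text IDs are hypothetical and only illustrate a seeded random draw of a fixed fraction within one grade level.

```python
import math
import random


def sample_size(population: int, z: float = 1.96,
                margin: float = 0.10, p: float = 0.5) -> int:
    """Cochran's sample size with finite population correction.

    z = 1.96 corresponds to 95% confidence; p = 0.5 is the most
    conservative assumed proportion.
    """
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)  # infinite-population size
    n = n0 / (1 + (n0 - 1) / population)         # corrected for small population
    return math.ceil(n)


def draw_texts(text_ids: list, fraction: float, seed: int) -> list:
    """Randomly pick a fraction of one grade level's texts (hypothetical helper)."""
    k = round(fraction * len(text_ids))
    return random.Random(seed).sample(text_ids, k)


needed = sample_size(66)

# Hypothetical IDs for one grade level; real file codes are not reproduced here.
first_year_ids = [f"FY-{i:02d}" for i in range(1, 21)]
chosen = draw_texts(first_year_ids, fraction=0.5, seed=2023)
```

Under these assumptions the formula yields 40 texts for a population of 66, which agrees with the calculator's suggestion reported above. Fixing the seed makes the draw repeatable.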

After the human texts were coded, we identified each one’s core topic or thesis, which we then used to prompt Google Bard and GPT-4 to write college-level essays. Initially, we had hoped to collect the assignment prompts for each human text, but that proved too difficult for a range of reasons. We followed the same procedure as for the human-written texts, except for the following practices.

- We created a crib sheet with our primary prompts to ensure we consistently requested texts.

- Each prompt began with “Assume you are a [first-year, sophomore, junior, etc.] college student,” though we added markers such as “majoring in English” or “majoring in electrical engineering” when human writers identified their major or when we evaluated courses in the English major core.

- In most cases, GAI was prompted to write more or to write more professionally. In the case of Google Bard, we often asked it to “Revise that so it has fewer bulleted lists and more paragraphs.” Bard would offer bulleted lists as one way to circumvent the computational complexities of writing coherently.

- Google Bard and GPT-4 do not generate texts longer than about 800 words because, at that point, the texts become inaccurate, too complex, or incomplete. Users can prompt the GAI to continue their texts or to expound upon certain claims, but doing so would disrupt the integrity of the texts with introductions and conclusions that the GAI produces, so we did not push the GAI to write 1900 words if, for example, the human did. However, in one case, Google Bard would not include an introduction or conclusion for a critical introduction to a course proposal. Finally, when asked to do so, it provided them as a separate text, so we added them to its original text (Somoye, 2023; Bard FAQ, 2023).

- While we did ask GAI to respond to certain texts such as articles and novels when appropriate, we did not directly instruct it to provide direct quotations due to challenges with reliability, complexity, and length. Both GPT-4 and Google Bard offered statements indicating that their direct quotations were representative and not always real.
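A crib sheet like the one described above can be expressed as a small template so that every request is phrased the same way. This is a minimal sketch under stated assumptions: the opening sentence and the revision request are quoted from the practices above, while the function name, the parameter names, and the wording of the essay request itself are hypothetical, since the exact prompts are not reproduced here.

```python
from typing import Optional

# Follow-up request quoted from the practices listed above.
REVISION_REQUEST = "Revise that so it has fewer bulleted lists and more paragraphs."


def build_prompt(level: str, topic: str, major: Optional[str] = None) -> str:
    """Assemble a consistent prompt from a grade level, topic, and optional major."""
    opener = f"Assume you are a {level} college student"
    if major:
        opener += f" majoring in {major}"
    # The essay request wording below is an assumption for illustration.
    return f"{opener}. Write a college-level essay on the following topic: {topic}"


# Hypothetical topic, not one drawn from the study's samples.
prompt = build_prompt("sophomore", "ethics in engineering",
                      major="electrical engineering")
```

Keeping the opener and follow-up requests in one place makes it easier to confirm that each model received the same instructions for each grade level.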